Log Processing Sub Configuration - Upload Sample Log File

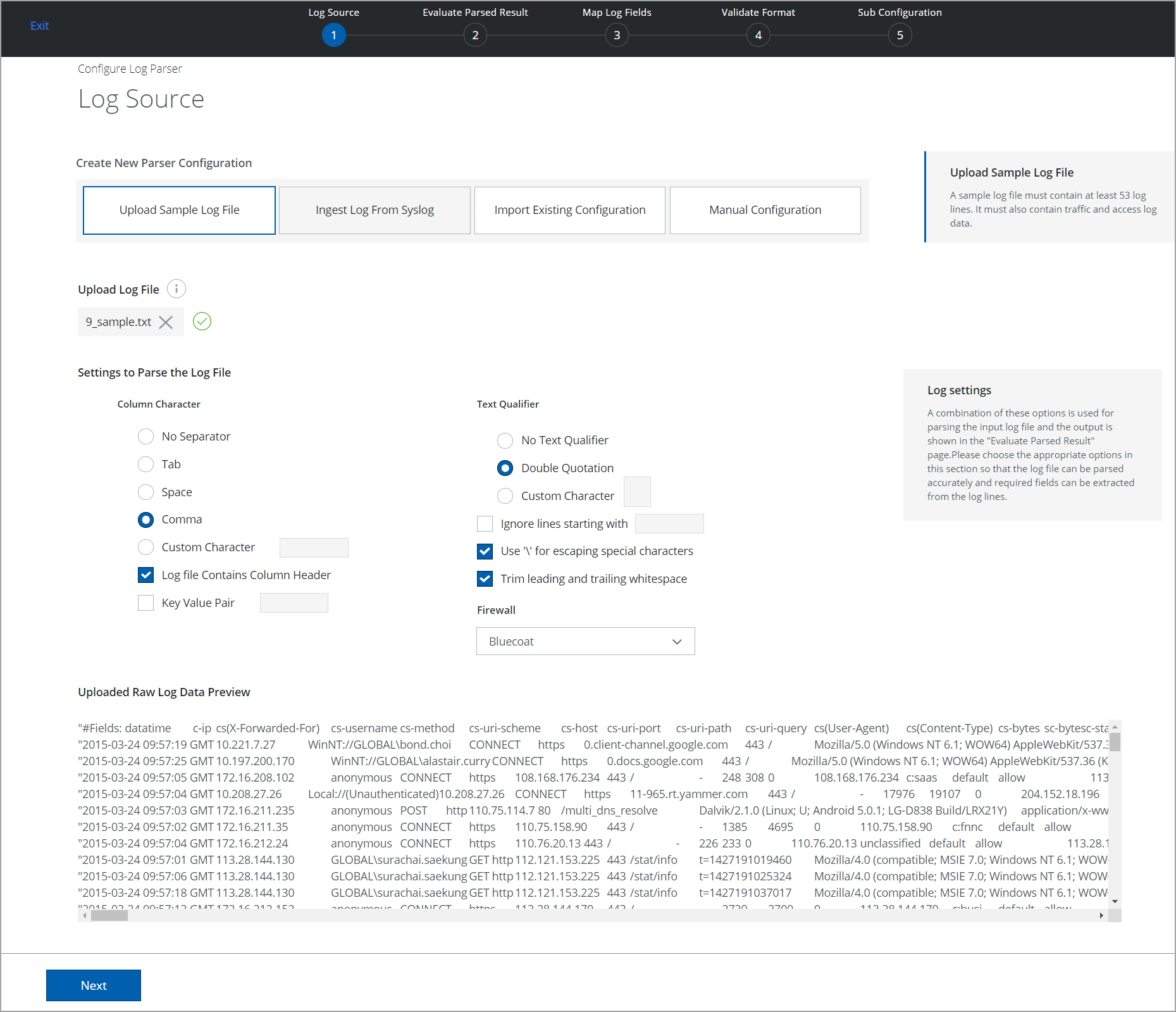

Upload Sample Log File

To configure the Log Parser using a sample log file, perform the following steps:

- Go to Settings > Infrastructure > Cloud Connector.

- Select the Cloud Connector instance you want to configure.

- Click the Log Processing tab.

- Click Add new Sub-Configuration.

- Click Upload Sample Log File.

NOTES:

- The maximum size limit to upload a sample log file is 5 MB.

- The uploaded log file is NOT stored or persisted in Skyhigh CASB. If you lose your browser session or if you need to edit your parser configuration, you must upload it again.

- Browse and select a sample log file from your local machine. Click Upload.

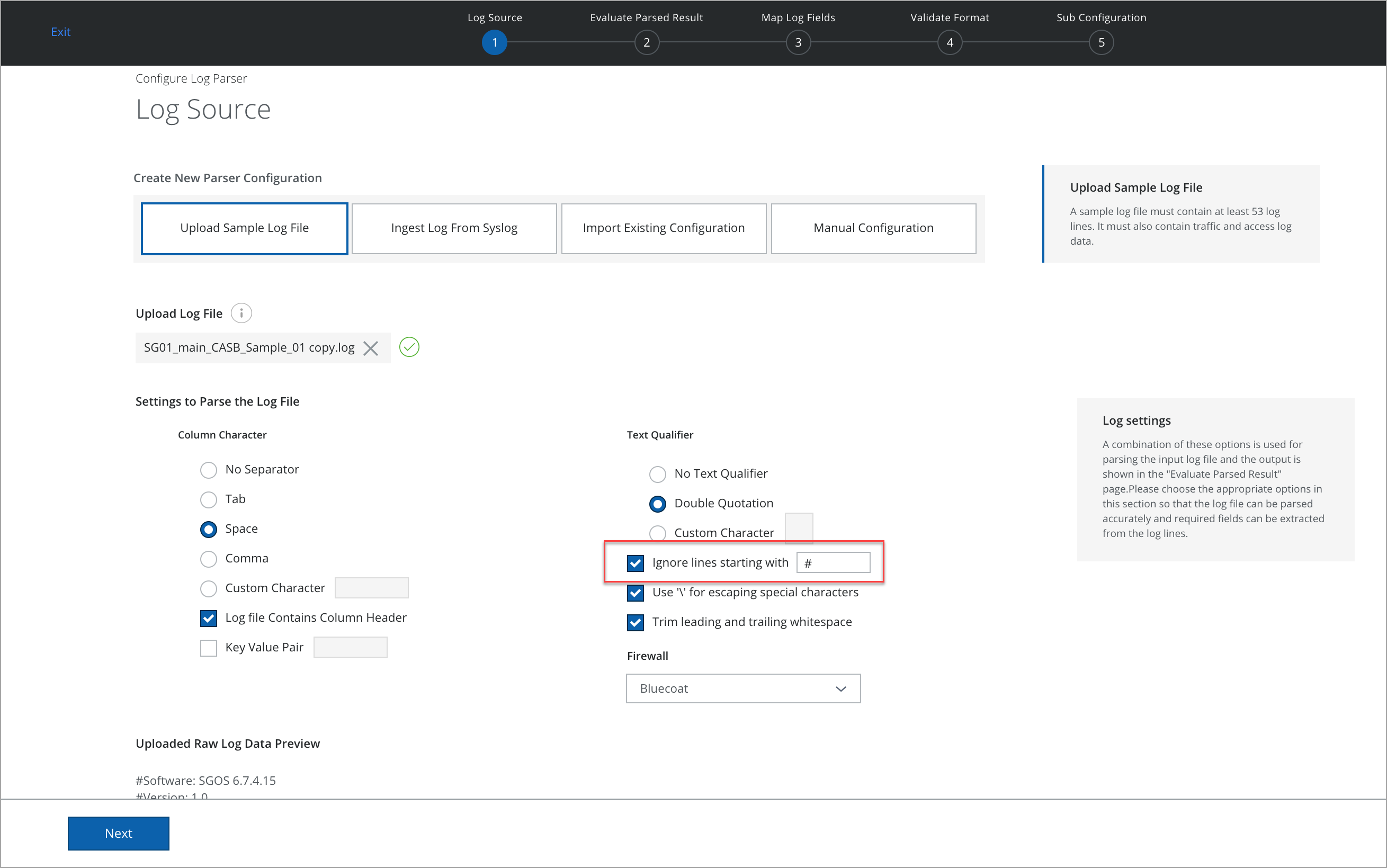

- After you upload the log file, a preview of the uploaded raw log data and the Log Parser settings are displayed. You can customize the Log Parser settings based on your raw log data format. For details, see Settings to Parse the Log File.

- Click Next.

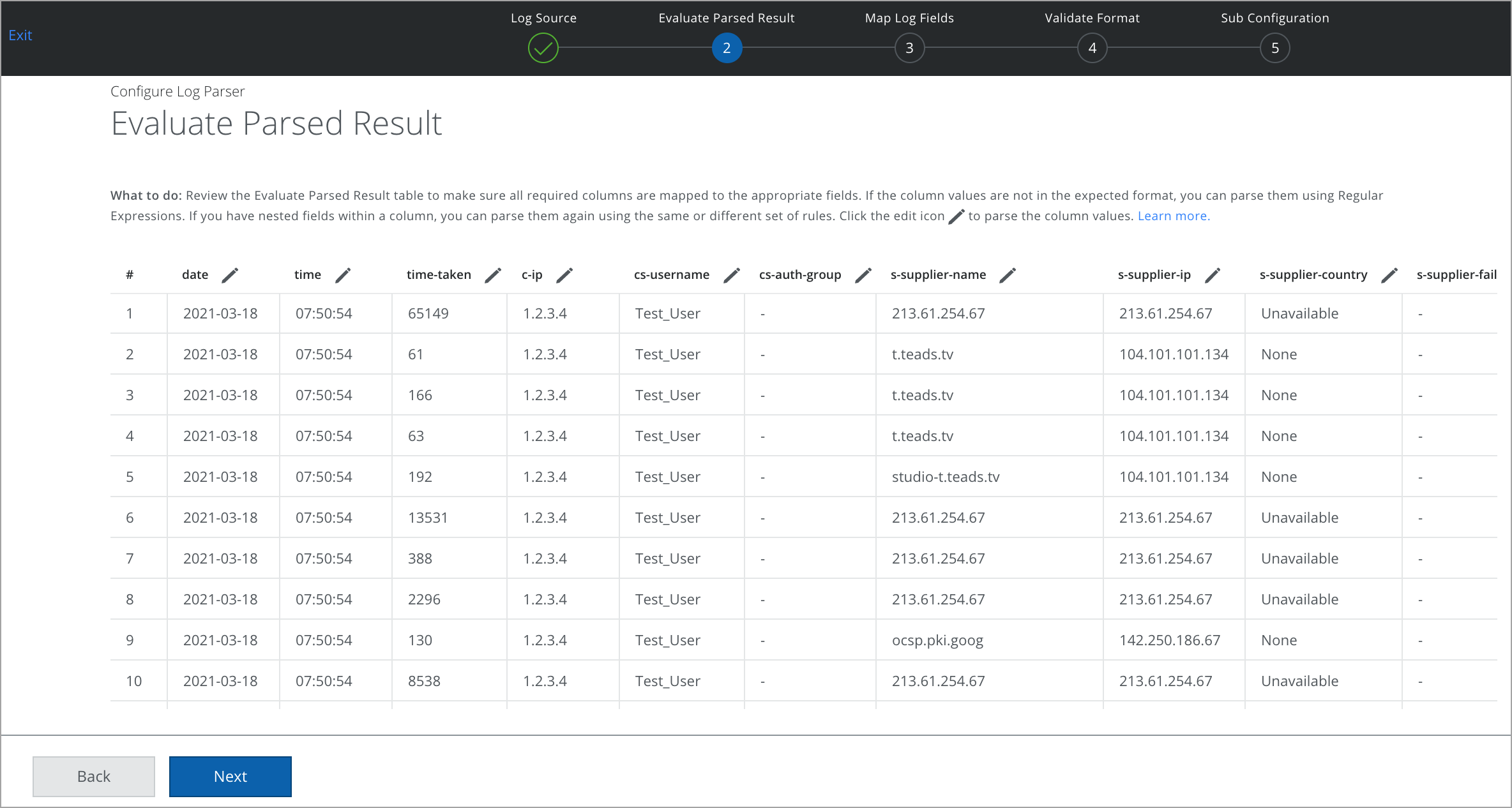

- On the Evaluate Parsed Result page, verify the parsed result. To modify any column, select the Edit pencil icon. For details, see Evaluate Parsed Result.

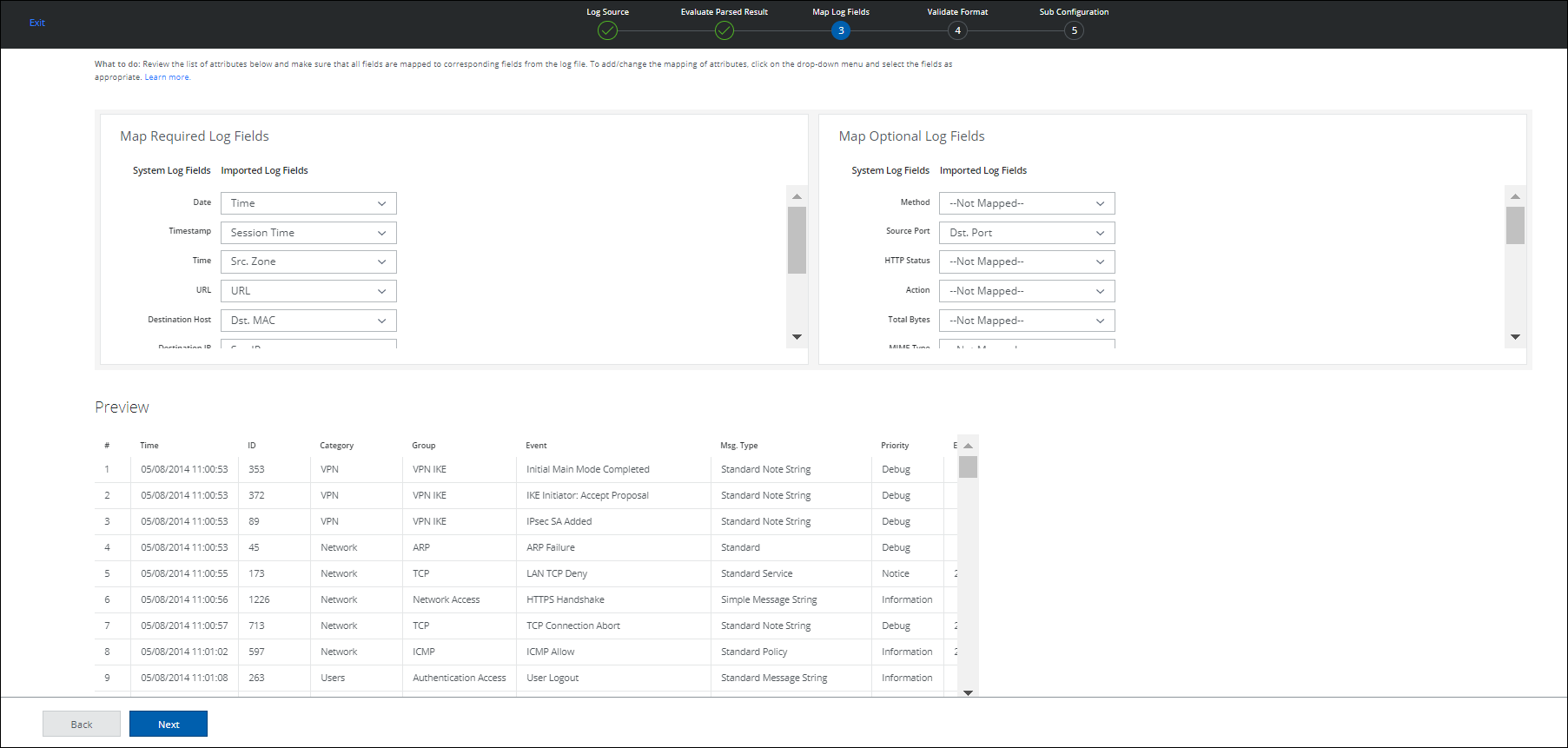

- On the Map Required Log Fields page, review the list of attributes and make sure that the required fields are mapped to the corresponding fields from the log file. To add or change the mapping of attributes, see Mapping Log Fields. Later, you can view the mapped log fields in the Preview section.

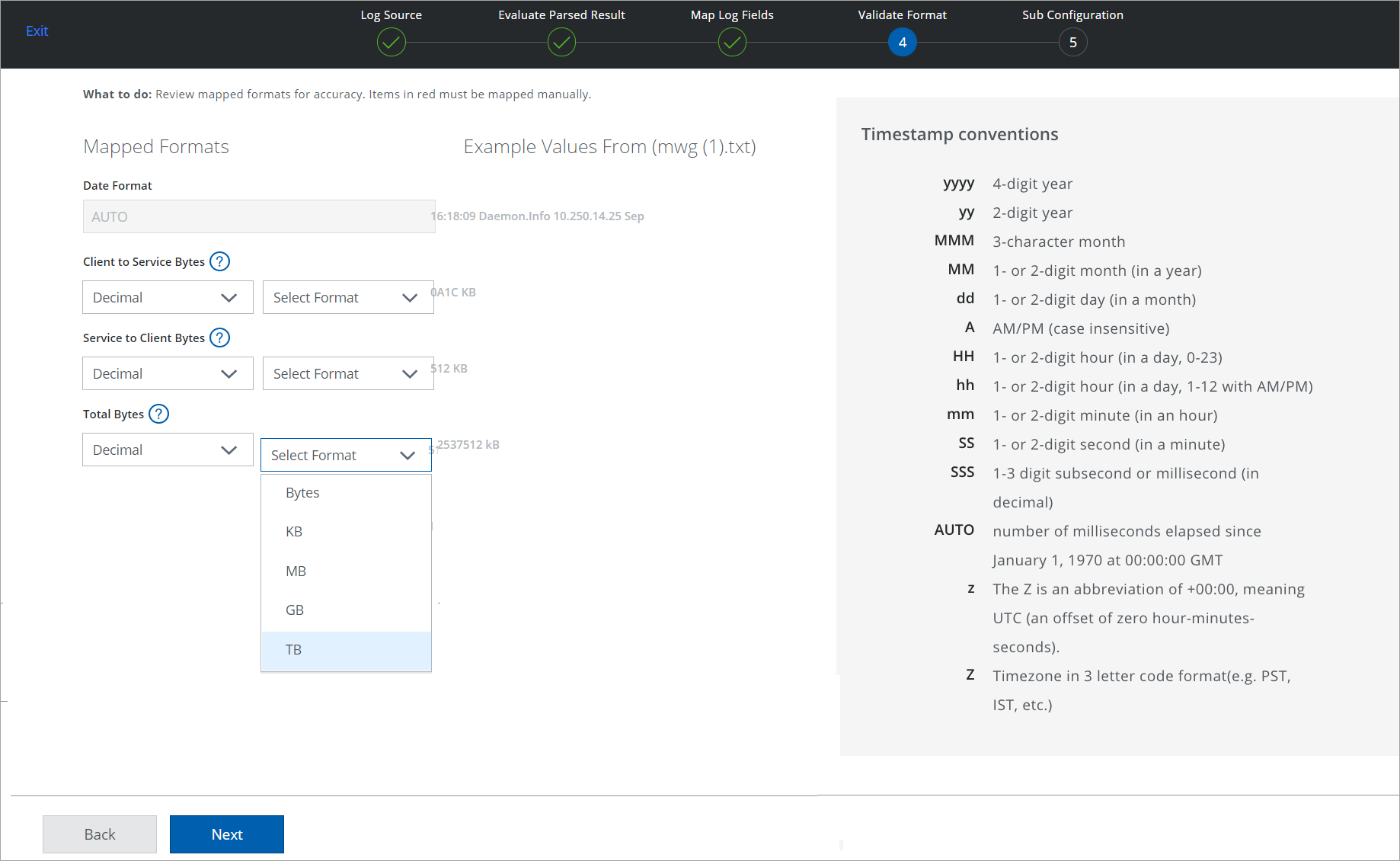

- On the Validate Format page, review mapped formats for accuracy. Items in red must be mapped manually. For details, see Validate Mapped Formats.

NOTE: The Date Format is auto-populated based on the Date or Timestamp Fields mapped on the previous page.

- On the Sub-Configuration page, you can edit, review, and save your configuration. For details, see Sub-Configuration.

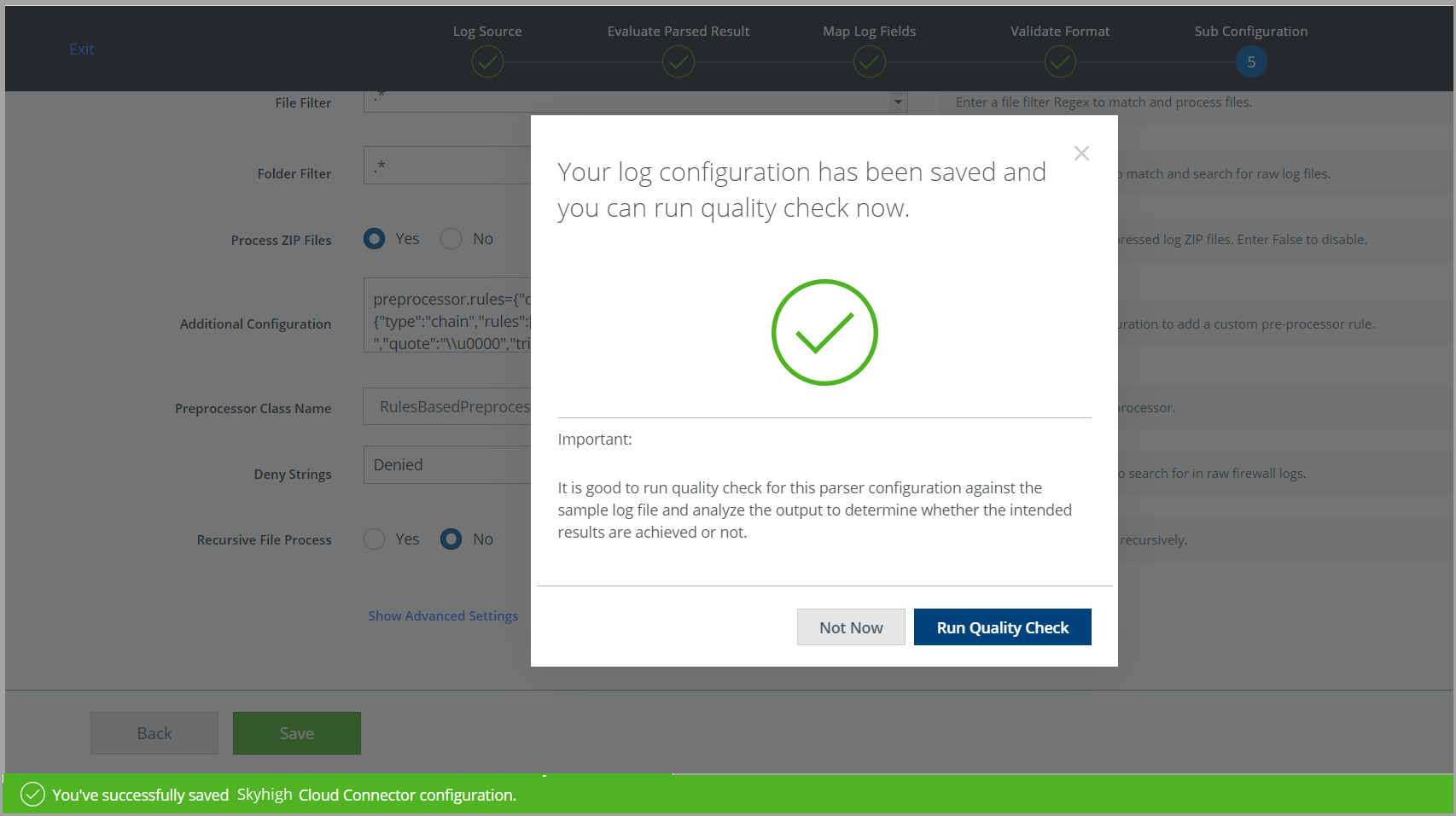

- After the Log Parser configuration is saved, a success message is displayed.

- To run the Quality Check now for this parser configuration, click Run Quality Check. To run the Quality Check later, click Not Now. To learn more, see Log Parser Quality Check.

Settings to Parse the Log File

A combination of options is used to parse the uploaded sample log file. View the output on the Evaluate Parsed Result page. Select the appropriate options in this section to accurately parse the sample log file and extract the required fields to create your Log Parser configuration.

Column Character

- No Separator. Select No Separator if your log files do not use a separator.

- Tab. Select Tab if the fields in your log files are separated by tabs.

- Space. Select Space if the fields in your log files are separated by spaces.

- Comma. Select Comma if the fields in your log files are separated by commas.

- Custom Character. Click the radio button and enter any custom character indicating a separator for the data in the log file.

- Log Files contains Column Header. Activate the checkbox if the first line of your log files contains a column header and you want to exclude this data.

- Key-Value Pair. Activate the checkbox and enter the key-value pair to be ignored in the log data files. For example, enter the key-value pair |=; if it is present in your log files.

Text Qualifier

- No Text Qualifier. If this option is selected, then no qualifiers are applied.

- Double Quotation. Select this option to distinguish the contents of a text field between double quotations.

- Custom Character. Select this option to distinguish the contents of a text field between custom characters.

- Ignore lines starting with. Activate this checkbox and enter a starting character, such as #, -, or $, to exclude lines that start with that character from data processing.

- Use '\' for escaping special characters. Activate this checkbox to interpret escaped special characters in the parsed string. For example, if this option is selected, "\n" is interpreted as a new line.

- Trim leading and trailing whitespace. Activate this checkbox to trim leading and trailing whitespace.

Firewall. Select any item in the Firewall to add deny strings automatically to the Sub-Configuration settings.

NOTE: Under Text Qualifier, the Ignore lines starting with option does not ignore a header line starting with "#Fields" if one is found in the logs.

For a log line starting with "#Fields", only the "#Fields" prefix is removed from the line. This handles Broadcom logs that include a header.
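To make the interaction of these options concrete, the following is a minimal Python sketch that approximates them with the standard `csv` module. It is not the Log Parser's implementation: the function `parse_log`, its parameter names, and the sample log are hypothetical, and stripping the colon after "#Fields" is an assumption.

```python
import csv
import io

# Hypothetical sample log; the layout is illustrative only.
SAMPLE_LOG = (
    "#Software: example proxy\n"
    "#Fields: date time c-ip cs-username\n"
    '2024-01-15 10:22:01 10.0.0.5 "bond choi"\n'
    '2024-01-15 10:22:07 10.0.0.9 "jane doe"\n'
)


def parse_log(text, delimiter=" ", qualifier='"', ignore_prefix="#", trim=True):
    """Split log lines into columns, approximating the options described above."""
    kept = []
    for line in text.splitlines():
        if line.startswith("#Fields"):
            # Header line: keep it, removing only the "#Fields" prefix.
            # Dropping the colon and space that follow is an assumption.
            kept.append(line[len("#Fields"):].lstrip(": "))
        elif ignore_prefix and line.startswith(ignore_prefix):
            # "Ignore lines starting with": skip the line entirely.
            continue
        else:
            kept.append(line)

    options = {"delimiter": delimiter}
    if qualifier is None:
        # "No Text Qualifier": treat quote characters as ordinary data.
        options["quoting"] = csv.QUOTE_NONE
    else:
        options["quotechar"] = qualifier

    reader = csv.reader(io.StringIO("\n".join(kept)), **options)
    # "Trim leading and trailing whitespace" applies to each column value.
    return [[cell.strip() if trim else cell for cell in row] for row in reader]


rows = parse_log(SAMPLE_LOG)
header, records = rows[0], rows[1:]
print(header)
print(records[0])
```

With Space as the column character and Double Quotation as the text qualifier, the quoted username stays in one column even though it contains a space.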

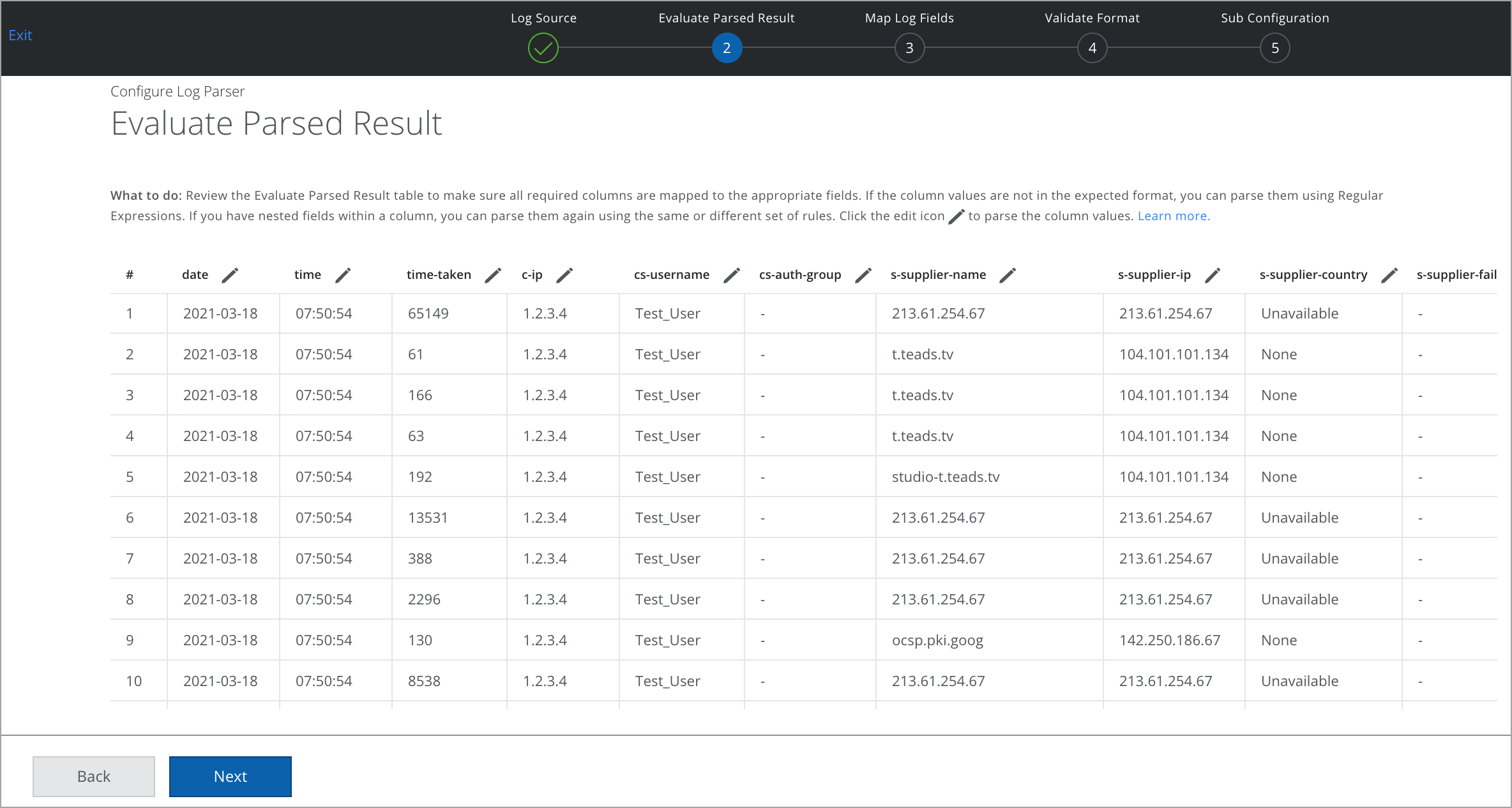

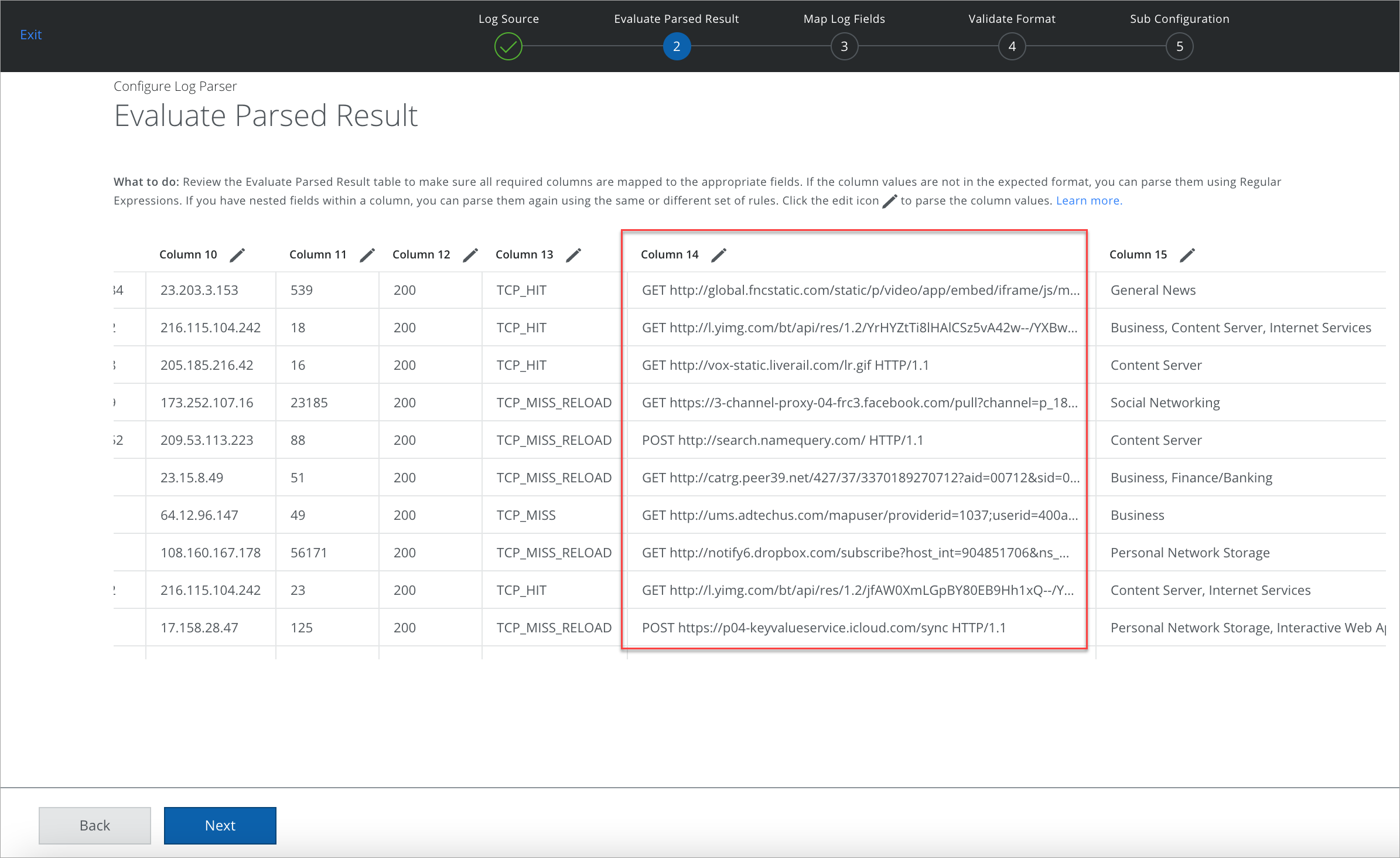

The processed data is displayed on the Evaluate Parsed Result page.

Evaluate Parsed Result

Review the Evaluate Parsed Result table to make sure all required columns are mapped to the appropriate fields. If the column values are not in the expected format, you can parse them using Regular Expressions. If you have nested fields within a column, you can parse them again using the same or different set of rules. Click the Edit pencil icon to parse the column values.

You can parse the values using the following two options:

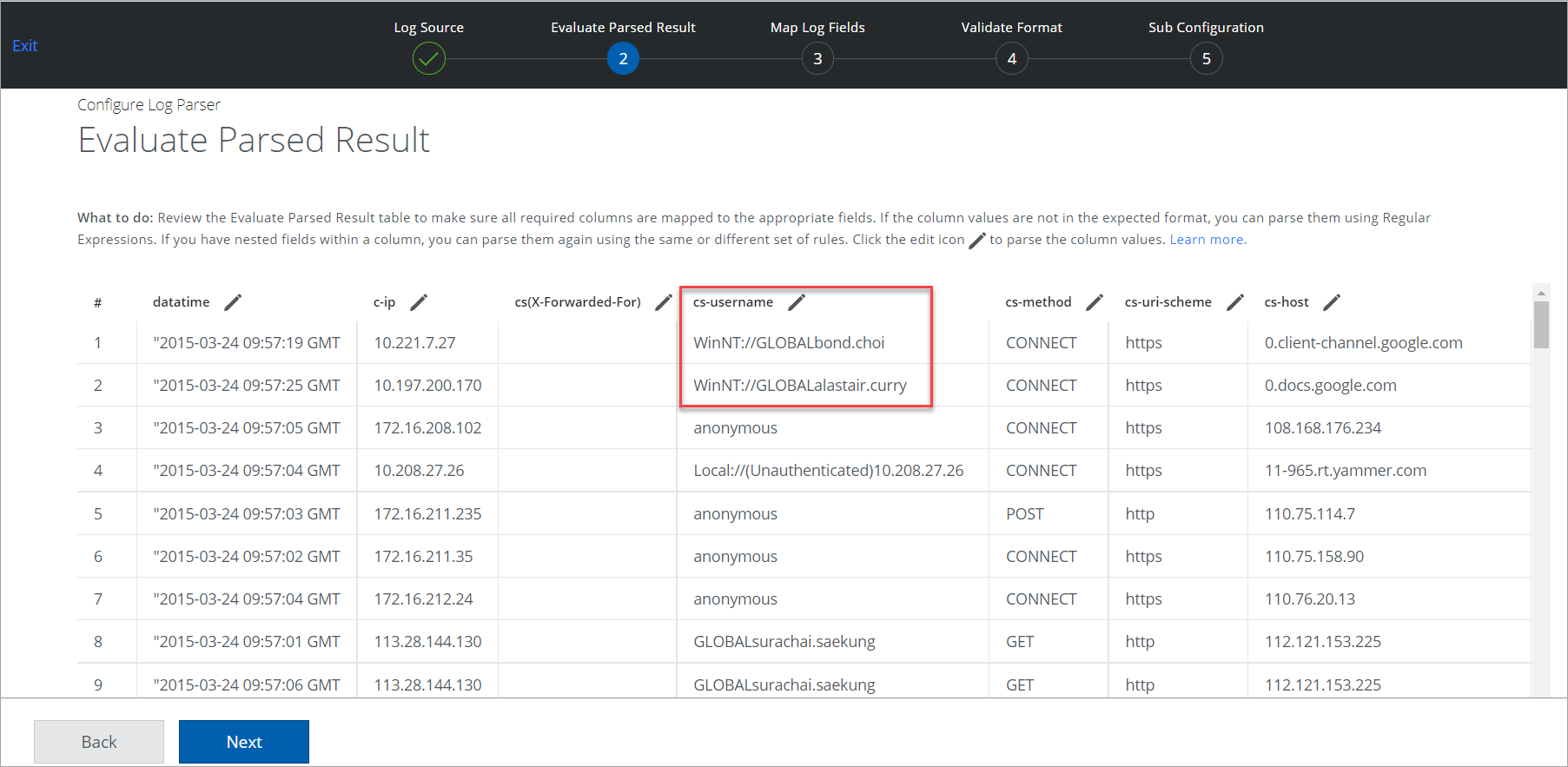

Parse Using Regex

You can use a Regex match to eliminate and replace an unwanted element from the column entry.

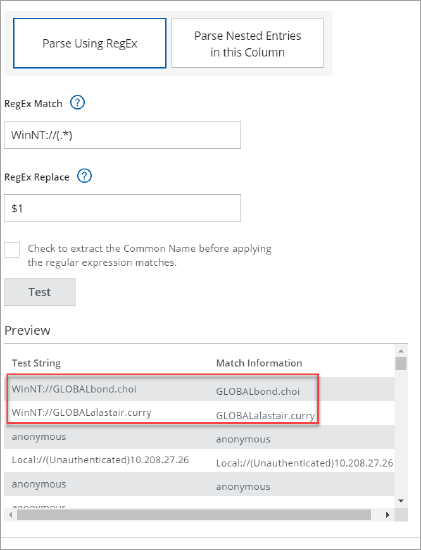

For example, suppose the column cs: username contains usernames with the prefix WinNT://. To eliminate the prefix from the username, perform these steps:

- Click the Edit pencil icon.

- Select Parse Using Regex and configure the following:

- Regex Match. Enter the value that needs to be replaced or eliminated. For example, WinNT://(.*)

- Regex Replace. Enter the replace element. For example, $1

- Click Test.

The Preview pane displays the Regex Replace results. The username WinNT://GLOBALbond.choi is changed to GLOBALbond.choi by eliminating the prefix.
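The same match-and-replace can be tried outside the product. The sketch below uses Python's `re` module with the pattern from the example above; the helper name `apply_regex` is hypothetical. Python writes the first capture group as `\1`, where the Regex Replace field uses `$1`.

```python
import re

# Pattern and replacement taken from the example above.
REGEX_MATCH = r"WinNT://(.*)"
REGEX_REPLACE = r"\1"  # Python's spelling of $1 (first capture group)


def apply_regex(value, match=REGEX_MATCH, replace=REGEX_REPLACE):
    """Rewrite value with the given pattern; raise ValueError if the pattern is invalid."""
    try:
        pattern = re.compile(match)
    except re.error as exc:
        raise ValueError(f"Invalid Regex: {exc}") from exc
    return pattern.sub(replace, value)


result = apply_regex("WinNT://GLOBALbond.choi")
print(result)
```

Values that do not contain the prefix are returned unchanged, so the rule can be applied safely to a whole column.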

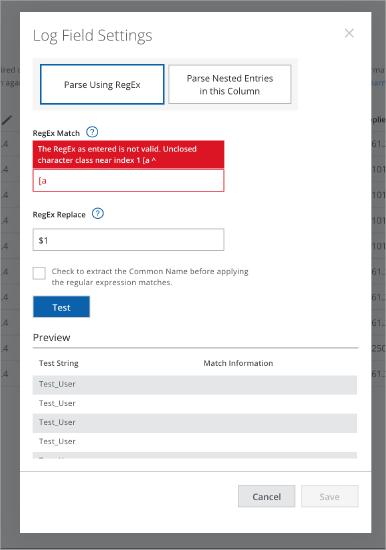

NOTE: If you enter an invalid Regex, the following error message is displayed.

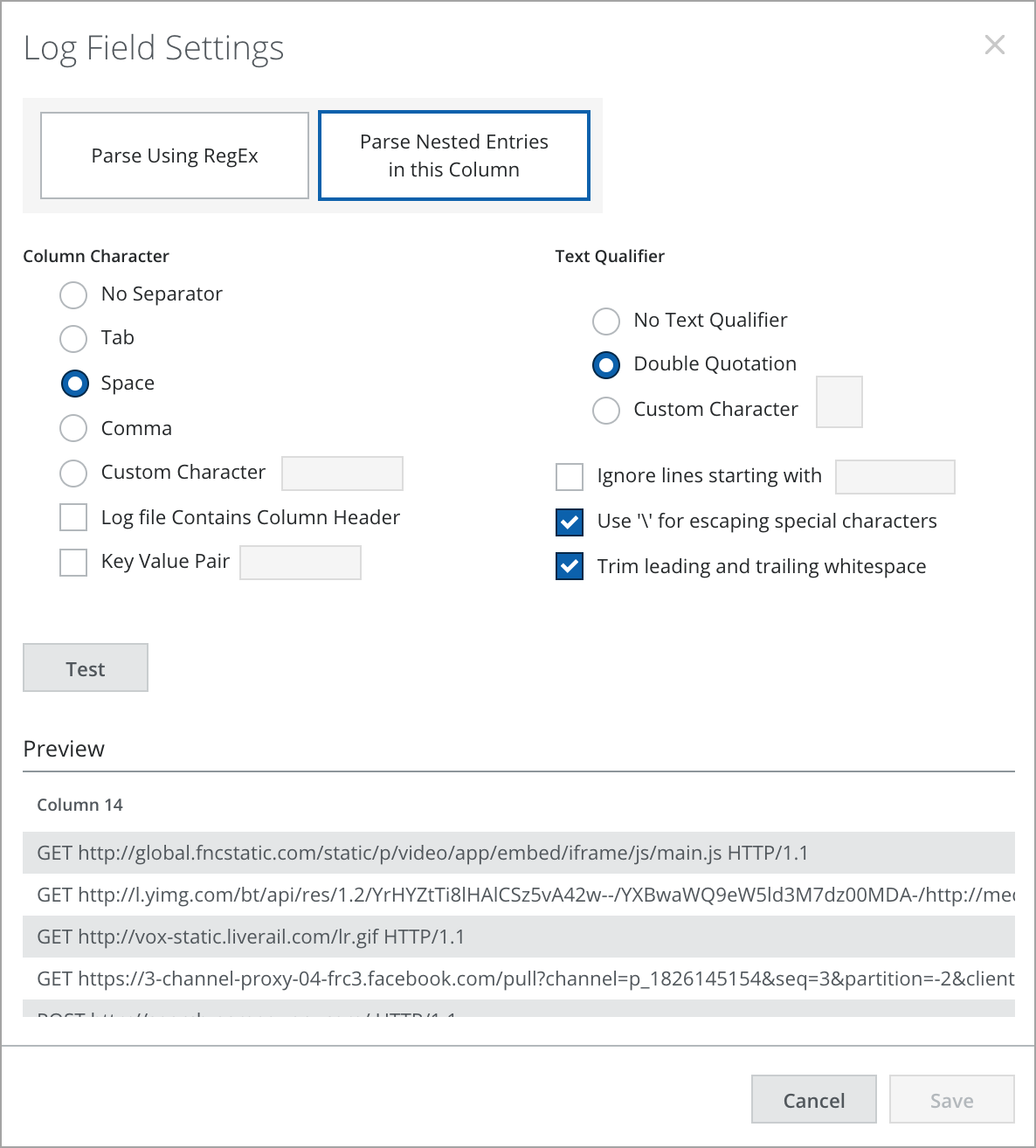

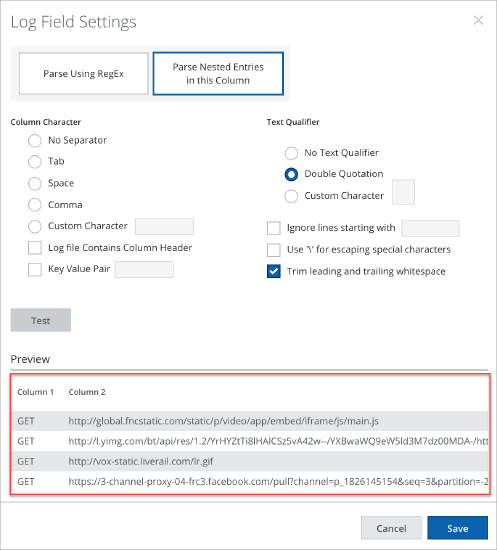

Parse Nested Entries in this Column

You can use this option to break a complex column entry into simpler, singular entries that provide the information the Log Parser requires. For example, suppose column 14 contains both the method and the URL. To break the information into two separate columns, perform these steps:

- Click the Edit pencil icon.

- Select Parse Nested Entries in this Column.

- Select a delimiter for the column. For example, select Space.

- Click Test.

The Preview pane displays the column entry separated into simpler column entries. Column 1 displays the method name and Column 2 displays the URL. A column can be broken down further into simpler entries using the same steps.
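As a rough illustration of nested parsing, the Python sketch below splits a column value on a delimiter and then splits one of the results again with a different delimiter. The function `parse_nested` and the sample value are hypothetical and only mimic the behavior described above.

```python
def parse_nested(entry, delimiter=" "):
    """Split one column entry into simpler entries on the chosen delimiter."""
    return [part for part in entry.split(delimiter) if part]


# Hypothetical column 14 value holding a method and a URL.
column_14 = "GET http://example.com/index.html"
method, url = parse_nested(column_14)

# A resulting column can be split again with the same or a different delimiter.
scheme, rest = parse_nested(url, delimiter="://")

print(method, url)
print(scheme, rest)
```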

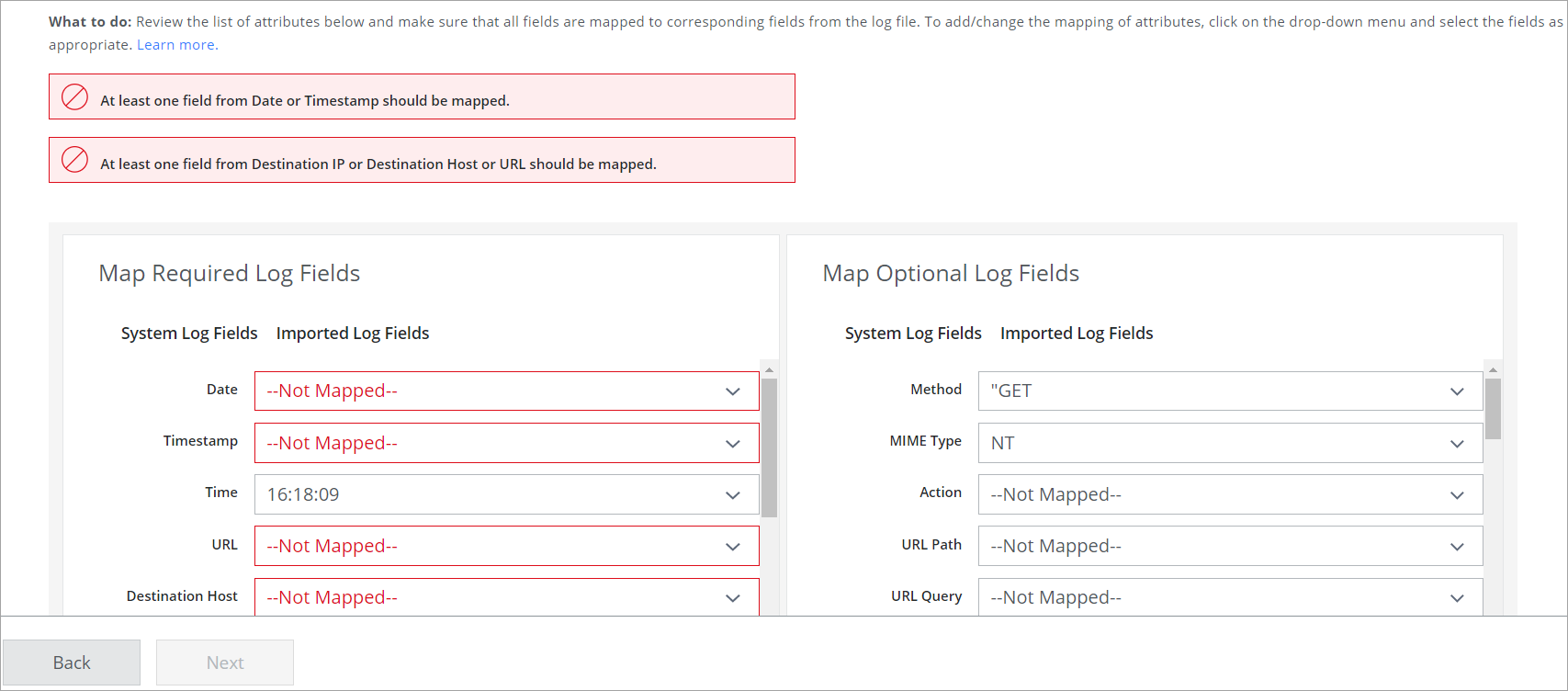

Mapping Log Fields

Review the log fields below and map the corresponding fields from the log file. The required fields must be mapped; otherwise, an error message is displayed.

Map Required Log Fields. These standard fields represent the header name in your log file. To add or change the attribute mapping, click the menu and select the appropriate fields corresponding to your log file.

- Date

- Timestamp

- Time

- URL

- Destination Host

- Destination IP

- Service to Client Bytes

- Source IP

- Client to Service Bytes

- Source User

Map Optional Log Fields. These optional fields represent the header name in your log file. If your log file contains any of these optional fields, you can map attributes for appropriate fields. If you do not have a header name in your log file, you can select the Custom fields and map attributes corresponding to your log file.

- Method

- MIME Type

- Action

- URL Path

- URL Query

- Protocol

- Destination Port

- User Agent

- HTTP Status

- Source Port

- Status

- Time Taken

- Total Bytes

- Port

- Protocol Add-on

- Referral

- Raw

- Session ID

- Custom 1

- Custom 2

- Custom 3

- Custom 4

- Custom 5

Validate Mapped Formats

Review mapped formats for accuracy. Items in red must be mapped manually.

- Date Format

- Client to Service Bytes

- Service to Client Bytes

- Total Bytes

NOTE: Map one of the following:

- Both Date and Time (if the Date and Time information is in two separate columns).

- Only Date (if a single column contains both Date and Time information).

- Only Timestamp (if a column contains the Timestamp in epoch format).
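The three mapping choices can be pictured as three ways of arriving at the same event time. The Python sketch below is illustrative only: the function `event_time`, the format strings, and the assumption that all values are in UTC are not taken from the product.

```python
from datetime import datetime, timezone


def event_time(date=None, time=None, timestamp=None,
               date_format="%Y-%m-%d", time_format="%H:%M:%S"):
    """Build one event time from whichever of the three mappings is present."""
    if timestamp is not None:
        # Only Timestamp: seconds since the epoch (assumed to be UTC).
        return datetime.fromtimestamp(int(timestamp), tz=timezone.utc)
    if date is not None and time is not None:
        # Both Date and Time: two separate columns combined.
        combined = datetime.strptime(f"{date} {time}", f"{date_format} {time_format}")
        return combined.replace(tzinfo=timezone.utc)
    if date is not None:
        # Only Date: one column that already holds date and time.
        return datetime.strptime(date, date_format).replace(tzinfo=timezone.utc)
    raise ValueError("Map Date and Time, only Date, or only Timestamp.")


from_two_columns = event_time(date="2024-01-15", time="10:22:01")
from_one_column = event_time(date="2024-01-15 10:22:01",
                             date_format="%Y-%m-%d %H:%M:%S")
from_epoch = event_time(timestamp="1705314121")

print(from_two_columns, from_one_column, from_epoch)
```

In this sketch the Date Format has to describe the whole column value, which is why the single-column case passes a format that includes the time.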

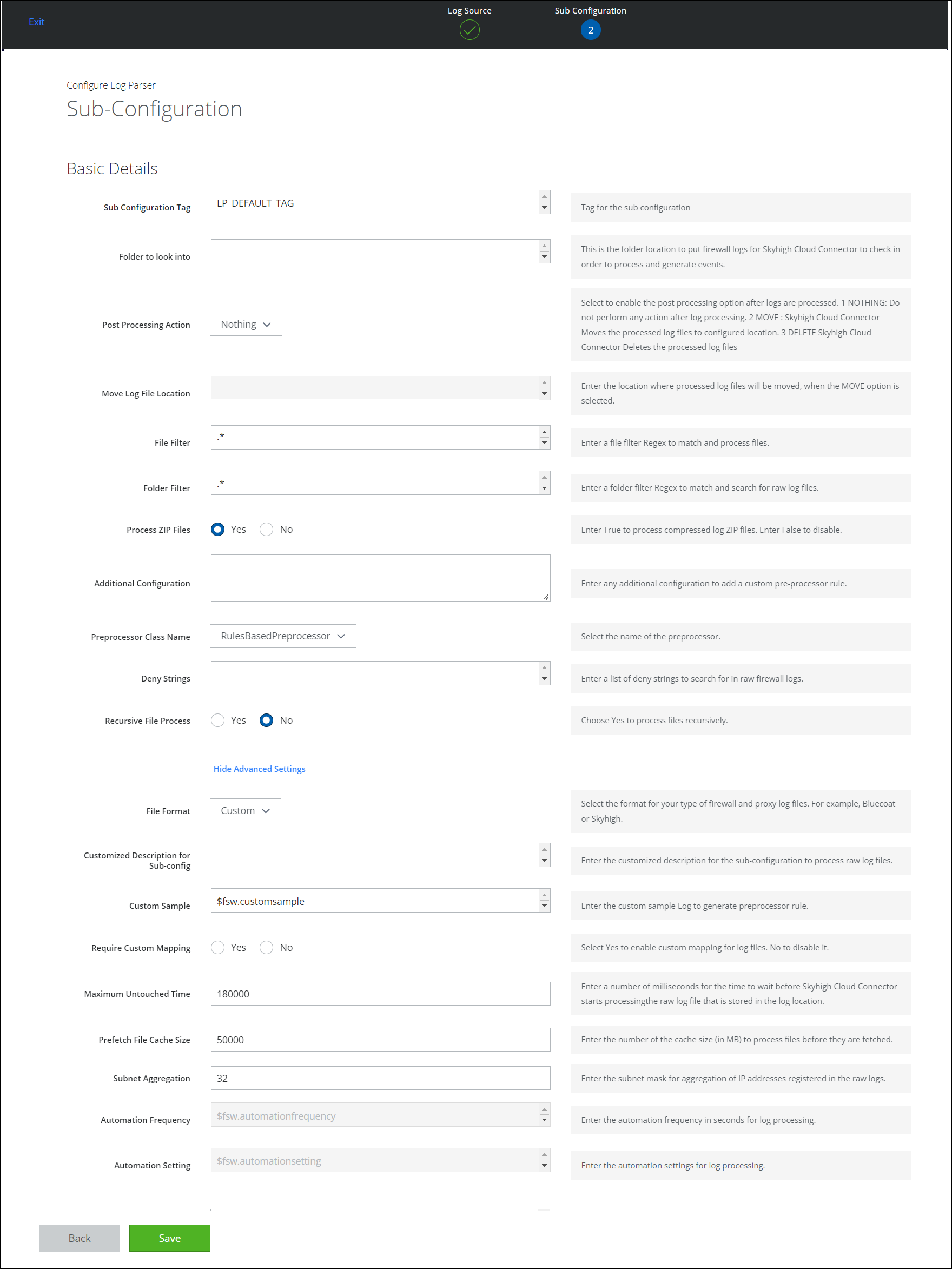

Sub-Configuration

You can review the basic and advanced configurations for your log files and edit the required fields and values. Most of the field values are auto-populated based on the activities performed in the previous steps.

Basic Details

| Fields | Description |
|---|---|
| Sub Configuration Tag | Tag for the sub configuration. |
| Folder to look into | Enter the folder location where firewall logs are placed for Skyhigh Cloud Connector to check, process, and generate events. |
| Post Processing Action | Select to enable the post-processing option after logs are processed. |
| Move Log File Location | Enter the location where processed log files are moved when you select the MOVE option. NOTE: When the Post Processing Action for logs is set to Move, Cloud Connector (CC) uses the Move Log File Location (target folder) as the directory into which all processed logs are moved. By design, a sub-directory is created in that location, named after the Folder to look into path with its components joined by underscores. For example, if the Move Log File Location is C:\shn\moved_logs and the Folder to look into path is C:\shn\logs, the processed logs are moved into the sub-folder C:\shn\moved_logs\C_shn_logs. |
| File Filter | Enter a file filter Regex to match and process files. |
| Folder Filter | Enter a folder filter Regex to match and search for raw log files. |
| Process ZIP Files | Select Yes to process compressed (ZIP) log files, or No to disable it. |
| Additional Configuration | Enter any additional configuration to add a custom pre-processor rule. |
| Preprocessor Class Name | Select the name of the preprocessor. |
| Deny Strings | Enter a list of deny strings to search for in raw firewall logs. |
| Recursive File Process | Choose Yes to process files recursively. |
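The sub-folder naming used for the Move Log File Location can be sketched in Python as follows. The rule is inferred from the single example given for that field (drive colon dropped, path components joined with underscores); the function `moved_logs_folder` is hypothetical, and the behavior for other path styles is an assumption.

```python
from pathlib import PureWindowsPath


def moved_logs_folder(move_location, folder_to_look_into):
    """Return the sub-folder that would receive processed logs under this naming rule."""
    source = PureWindowsPath(folder_to_look_into)
    drive = source.drive.rstrip(":")           # "C:" becomes "C"
    parts = [drive] if drive else []
    # Skip the anchor ("C:\") when present; keep the remaining components.
    parts.extend(source.parts[1:] if source.anchor else source.parts)
    sub_directory = "_".join(parts)            # "C_shn_logs"
    return PureWindowsPath(move_location) / sub_directory


target = moved_logs_folder(r"C:\shn\moved_logs", r"C:\shn\logs")
print(target)
```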

Advanced Settings

| Fields | Description |
|---|---|
| File Format | Select the format for your type of firewall and proxy log files. For example, Bluecoat or Skyhigh Security. |
| Customized Description for Sub-config | Enter a customized description for the sub-configuration that processes raw log files. |
| Custom Sample | Enter a custom sample log to generate the pre-processor rule. |
| Require Custom Mapping | Select Yes to enable custom mapping for log files, or No to disable it. |
| Maximum Untouched Time | Enter the time, in milliseconds, to wait before Skyhigh Cloud Connector starts processing a raw log file stored in the log location. |
| Prefetch File Cache Size | Enter the cache size (in MB) used to process files before they are fetched. |
| Subnet Aggregation | Enter the subnet mask for aggregation of IP addresses registered in the raw logs. |
| Automation Frequency | Enter the automation frequency, in seconds, for log processing. |
| Automation Setting | Enter the automation settings for log processing. |
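To illustrate what a subnet mask does in the Subnet Aggregation field, the Python sketch below groups source IP addresses by the network they fall into. How Cloud Connector aggregates internally is not described here, so this is only one plausible reading; the function `aggregate_by_subnet` and the sample addresses are hypothetical.

```python
import ipaddress
from collections import Counter


def aggregate_by_subnet(addresses, subnet_mask="255.255.255.0"):
    """Count addresses per network after applying the subnet mask."""
    counts = Counter()
    for address in addresses:
        # strict=False lets a host address stand in for its network.
        network = ipaddress.ip_network(f"{address}/{subnet_mask}", strict=False)
        counts[str(network)] += 1
    return dict(counts)


summary = aggregate_by_subnet(["10.0.0.5", "10.0.0.9", "10.0.1.20"])
print(summary)
```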