LLM Risk Attributes for Skyhigh Cloud Registry

Skyhigh CASB has introduced 17 new sub-categories under the AI services category to help shadow IT users detect AI-related threats and classify AI services more accurately.

We are extending our support by capturing Large Language Model (LLM) details for AI and non-AI categories in the Cloud Registry, which provides a deeper assessment of the risks related to AI and non-AI services. LLMs are advanced AI models that can understand and generate human-like text based on their input. Capturing LLM details plays a crucial supporting role in protecting AI-generated content in cloud services.

Adding LLM details to the AI and non-AI categories enhances the protection of your AI and non-AI services against threats. The advantages include:

- Visibility. Improve visibility into shadow AI cloud services by processing and summarizing data so that you can respond quickly to potential threats.

- Risk Assessments. Enhance risk assessment by analyzing historical data and prioritizing threats based on risk scoring.

Skyhigh Collaboration with Enkrypt AI

Enkrypt AI secures generative AI and governs compliance and risk through seamless monitoring of AI services. Skyhigh collaborates with Enkrypt AI to enforce controls and offer detailed visibility into AI operations. As part of this collaboration, Enkrypt AI provides the LLM security attribute values that help Skyhigh analyze the risks associated with AI services. For more insight into LLM risk and to understand the value proposition of Enkrypt AI, see Enkrypt AI.

Skyhigh Supported LLM Risk Attributes

In the Cloud Registry, under the AI and non-AI categories, each service that supports an LLM displays the following risk attributes, along with their values and risk scores. The LLM risk score quantifies the security, compliance, and ethical risks associated with an AI or non-AI service, helping you make informed decisions about adopting AI services and implementing the necessary safeguards.

You can view the LLM risk attributes on the Registry Overview page:

- Jailbreak. The degree to which a model can be manipulated to generate content misaligned with its intended purpose.

- Toxicity. The degree to which a model generates toxic or harmful content, like threats and hate speech.

- Bias. The degree to which a model generates biased or unfair content that could be introduced due to training data.

- Malware. The degree to which a model can be manipulated to generate malware or known malware signatures.

- National Institute of Standards and Technology (NIST). Indicates whether a model aligns with the NIST AI Risk Management Framework (AI RMF). A model that lacks this alignment poses a higher risk of untrustworthy behavior.

- Open Worldwide Application Security Project (OWASP). Flags models that are exposed to critical risks identified in the OWASP Top 10 for LLMs, as these risks may lead to security vulnerabilities.

- Chemical, Biological, Radiological, and Nuclear (CBRN). Assesses how the AI system responds to adversarial prompts related to chemical, biological, and cybersecurity threats.

- Harmful. Evaluates how the AI system behaves in response to prompts related to physical, emotional, or social harm.

Use Cases

The following table lists the Skyhigh supported LLM risk attributes and the corresponding example use cases:

| Skyhigh Supported LLM Risk Attribute | Use Case |
|---|---|
| Jailbreak | Skyhigh calculates a risk score for the Jailbreak LLM attribute when it detects that a model within an AI service has been manipulated to produce content that deviates from its intended purpose. For example, consider a financial services chatbot trained solely to answer questions about banking products. If it is manipulated into disclosing internal security procedures or bypassing transaction verification limits, this constitutes a jailbreak scenario. When Skyhigh identifies such behavior, it assigns a Jailbreak risk score, which indicates potential exposure to fraud and other security risks. |
| Toxicity | Skyhigh calculates a risk score for the Toxicity LLM attribute when it detects that a model within an AI service can generate toxic or harmful content. For example, if an AI-powered customer support assistant unintentionally generates racist or threatening language during a heated interaction with a frustrated customer, it can lead to public relations crises and regulatory complaints. |
| Bias | Skyhigh calculates a risk score for the Bias LLM attribute when it detects that a model within an AI service can generate biased or unfair content. For example, an AI-driven hiring platform might disproportionately reject applicants from specific universities or demographics due to biased training data. This can lead to legal liability for discrimination and harm the brand's reputation. |
| Malware | Skyhigh calculates a risk score for the Malware LLM attribute when it detects that a model within an AI service has been manipulated to generate malware or known malware signatures. For example, a developer assistant LLM could be deceived into generating malicious code snippets that create backdoors in corporate applications, ultimately leading to a complete system compromise. |
| NIST | Skyhigh calculates a risk score for the NIST LLM attribute when it detects that a model within an AI service does not align with the NIST AI Risk Management Framework. For example, if an AI compliance assessment indicates that the model's design and governance fail to meet the NIST AI RMF controls, the model's decisions cannot be trusted for mission-critical tasks such as healthcare diagnoses, where patient safety is at risk. |
| OWASP | Skyhigh calculates a risk score for the OWASP LLM attribute when it detects that a model within an AI service is exposed to critical risks identified in the OWASP Top 10 for LLMs. For example, if an AI knowledge base integrated into a SaaS platform is vulnerable to prompt injection attacks, attackers could potentially exfiltrate sensitive HR files. Prompt injection is one of the risks listed in the OWASP Top 10 for LLMs. |
| CBRN | Skyhigh calculates a risk score for the CBRN LLM attribute when it detects that a model within an AI service responds unsafely to adversarial prompts related to chemical, biological, and cybersecurity threats. For example, a generative AI model designed for academic research might respond to carefully worded prompts by providing step-by-step instructions for synthesizing dangerous biological compounds, thereby bypassing ethical safeguards. |
| Harmful | Skyhigh calculates a risk score for the Harmful LLM attribute when it detects that a model within an AI service produces harmful responses to prompts related to physical, emotional, or social harm. For example, if a mental health support chatbot generates content that unintentionally encourages self-harm while responding to a distressed user, it could escalate personal safety risks and increase liability. |

Risk Attribute Mapping Values by LLM Availability

The following table shows the attribute values that Skyhigh displays, depending on whether a service supports an LLM and whether that model is available in the Enkrypt AI assessment.

The following abbreviations are used in the table below:

- H/M/L = High/Medium/Low

- NPK = Not Publicly Known

- NA = Not Applicable

| Skyhigh Mapping Based on LLM | Jailbreak | Toxicity | Bias | Malware | NIST | OWASP | CBRN | Harmful |
|---|---|---|---|---|---|---|---|---|
| Skyhigh CSP supports LLM, and the model is available in Enkrypt AI | H/M/L | H/M/L | H/M/L | H/M/L | H/M/L | H/M/L | H/M/L | H/M/L |
| Skyhigh CSP supports LLM, and the model is not available in Enkrypt AI | NPK | NPK | NPK | NPK | NPK | NPK | NPK | NPK |
| Skyhigh CSP does not support LLM | NA | NA | NA | NA | NA | NA | NA | NA |
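The mapping in the table above amounts to a small lookup with three cases. The following Python sketch is illustrative only: the function name `display_values`, the `Level` enum, and the idea of receiving Enkrypt AI results as a dictionary keyed by attribute name are assumptions, not a Skyhigh or Enkrypt AI API. It also assumes that a model is either fully assessed by Enkrypt AI or not available at all.

```python
from enum import Enum
from typing import Dict, Optional

# The eight LLM risk attributes listed in the mapping table.
ATTRIBUTES = ("Jailbreak", "Toxicity", "Bias", "Malware", "NIST", "OWASP", "CBRN", "Harmful")


class Level(Enum):
    """High/Medium/Low values (H/M/L in the table)."""
    HIGH = "High"
    MEDIUM = "Medium"
    LOW = "Low"


def display_values(supports_llm: bool,
                   enkrypt_levels: Optional[Dict[str, Level]]) -> Dict[str, str]:
    """Hypothetical helper: return the value shown for each LLM risk attribute.

    supports_llm   -- whether the CSP supports an LLM
    enkrypt_levels -- per-attribute levels if the model is available in
                      Enkrypt AI, or None if it is not
    """
    if not supports_llm:
        # Row 3: the CSP does not support an LLM -> Not Applicable.
        return {attr: "NA" for attr in ATTRIBUTES}
    if enkrypt_levels is None:
        # Row 2: LLM supported, but the model is not in Enkrypt AI -> Not Publicly Known.
        return {attr: "NPK" for attr in ATTRIBUTES}
    # Row 1: LLM supported and assessed -> High/Medium/Low per attribute.
    return {attr: enkrypt_levels[attr].value for attr in ATTRIBUTES}


# Usage: an assessed model with a High Jailbreak result and Low everywhere else.
assessed = {attr: Level.LOW for attr in ATTRIBUTES}
assessed["Jailbreak"] = Level.HIGH
print(display_values(True, assessed)["Jailbreak"])  # High
print(display_values(True, None)["Bias"])           # NPK
print(display_values(False, None)["CBRN"])          # NA
```

The order of the checks matters: a CSP that does not support an LLM always shows NA, even if assessment data happens to exist.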

View LLM Risk Attributes

Perform the following steps to view the LLM risk attributes for AI and non-AI services:

View LLM Risk Attributes for AI Category Services

- Go to Governance > Cloud Registry.

- On the Filters tab, select the Artificial Intelligence category under Service Category.

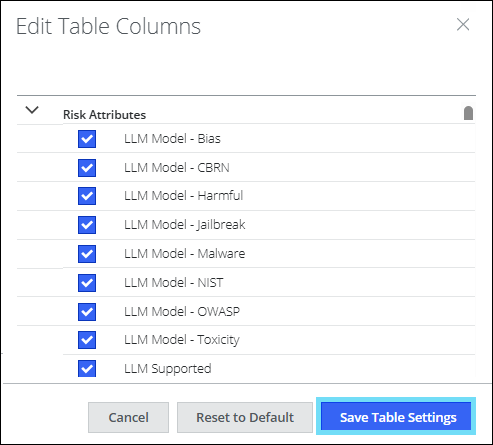

- Click Actions > Settings > Edit Table Columns.

- On the Edit Table Columns dialog, under Risk Attributes, select the LLM Models and LLM Supported checkboxes, and then click Save Table Settings. The table on the Cloud Registry page displays only the services with the selected columns.

To filter values based on attributes, follow the steps below. Otherwise, continue from step 8:

- On the Risk Attributes category, select LLM Model - Bias from the menu.

- Select the Medium Risk and High Risk value checkboxes, and then click Apply.

- The table displays the services with medium and high-risk values for the LLM Model - Bias attribute.

- Select any service from the table.

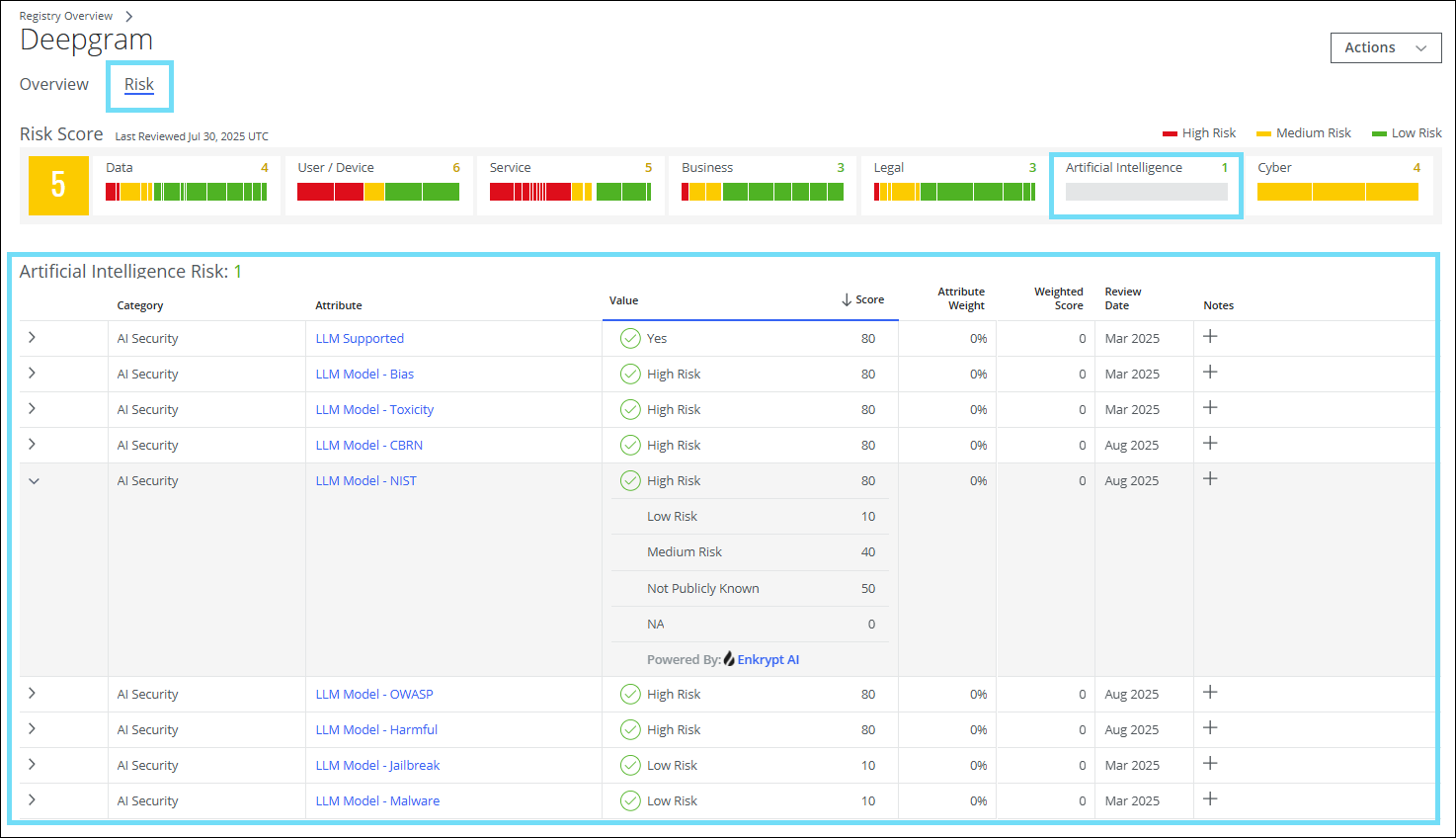

- On the Registry Overview page, click the Risk tab, and then click the Artificial Intelligence tab.

View LLM Risk Attributes for Non-AI Category Services

1. Go to Governance > Cloud Registry.

2. Select the Filters tab.

3. In the Risk Attributes category, select LLM Supported from the dropdown, select the Yes checkbox, and then click Apply.

4. To view LLM risk attributes for non-AI category services, enter NOT as a logical operator in the Omnibar search, and then select the Artificial Intelligence category under Service Category.

To view LLM risk attributes for specific categories, select the required service categories under Service Category.

To filter values based on attributes, follow steps 2 through 9 in View LLM Risk Attributes for AI Category Services.
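The non-AI procedure combines two conditions: LLM Supported is Yes, and the service category is NOT Artificial Intelligence. An attribute filter, such as Medium or High for Bias, can then narrow the results further. The following Python sketch mirrors that logic on in-memory records. The `Service` record, its fields, the `non_ai_llm_services` function, and the sample service names are hypothetical simplifications for illustration; the Omnibar itself is a UI feature, not a programmatic API.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Sequence

AI_CATEGORY = "Artificial Intelligence"


@dataclass
class Service:
    """Hypothetical, simplified Cloud Registry record."""
    name: str
    category: str
    llm_supported: bool
    # Per-attribute values such as {"Bias": "High"}; empty if not applicable.
    llm_levels: Dict[str, str] = field(default_factory=dict)


def non_ai_llm_services(services: Sequence[Service],
                        attribute: Optional[str] = None,
                        levels: Sequence[str] = ("Medium", "High")) -> List[Service]:
    """LLM Supported = Yes AND NOT (Service Category = Artificial Intelligence),
    optionally narrowed to services whose `attribute` value is in `levels`."""
    matches = []
    for svc in services:
        if not svc.llm_supported:        # LLM Supported filter: Yes
            continue
        if svc.category == AI_CATEGORY:  # NOT Artificial Intelligence
            continue
        if attribute is not None and svc.llm_levels.get(attribute) not in levels:
            continue                     # optional attribute-value filter
        matches.append(svc)
    return matches


registry = [
    Service("ExampleChat", AI_CATEGORY, True, {"Bias": "High"}),
    Service("ExampleDocs", "Collaboration", True, {"Bias": "Medium"}),
    Service("ExampleMail", "Collaboration", True, {"Bias": "Low"}),
    Service("ExampleDrive", "Collaboration", False),
]
print([s.name for s in non_ai_llm_services(registry)])          # ['ExampleDocs', 'ExampleMail']
print([s.name for s in non_ai_llm_services(registry, "Bias")])  # ['ExampleDocs']
```

All conditions are combined with AND, so each filter can only remove services from the result, never add them.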

On the Registry Overview page, the AI Security category displays the LLM risk attributes and their values for the selected service.

For more insight into LLM risk and to understand the value proposition of Enkrypt AI, click the Powered By: Enkrypt AI link in the Value and Score column.

NOTE: The risk score for each attribute is derived from the Enkrypt AI assessment. Skyhigh then evaluates these attributes for each CSP and displays them as High, Medium, or Low values.

Risk Weight for LLM Risk Attributes

The LLM risk attributes are zero-weighted and are not part of Skyhigh's default risk scoring. As a result, the DNA chart on the Artificial Intelligence tab is blank by default. Bars appear in the DNA chart only if you override the LLM risk attribute weights in Risk Management. To override them, go to Governance > Risk Management and edit the Artificial Intelligence risk category weight. For details about editing the risk category weights, see The Global Risk Weighing. Changing the Artificial Intelligence risk category weight can affect the risk scores of CSPs in the Cloud Registry.
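To see why a zero weight leaves the Artificial Intelligence results out of a CSP's risk score, consider a generic weighted average of risk category scores. This Python sketch only illustrates the effect of zero weighting: the formula, the category names, the scores, and the weights are all hypothetical and are not Skyhigh's actual risk score computation.

```python
from typing import Dict


def weighted_score(category_scores: Dict[str, float],
                   weights: Dict[str, float]) -> float:
    """Generic weighted average; a category with weight 0 contributes nothing."""
    total_weight = sum(weights.get(cat, 0.0) for cat in category_scores)
    if total_weight == 0:
        return 0.0
    weighted_sum = sum(score * weights.get(cat, 0.0)
                       for cat, score in category_scores.items())
    return weighted_sum / total_weight


# Hypothetical category scores for one CSP.
scores = {"Category A": 4.0, "Category B": 6.0, "Artificial Intelligence": 9.0}

default_weights = {"Category A": 1.0, "Category B": 1.0, "Artificial Intelligence": 0.0}
overridden_weights = {"Category A": 1.0, "Category B": 1.0, "Artificial Intelligence": 1.0}

print(weighted_score(scores, default_weights))     # 5.0 (AI score ignored)
print(weighted_score(scores, overridden_weights))  # about 6.33 (AI score now counts)
```

With the zero default weight, even a high AI score leaves the overall result unchanged; after the override, the same AI score raises it, which is why changing this weight can shift CSP risk scores across the registry.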

For details about Artificial Intelligence Risk, see Artificial Intelligence Risk Management.

NOTE: Enkrypt AI performs the security risk assessment and risk scoring for the LLM risk attributes. However, these attributes are not part of Skyhigh's default risk scoring.

Reports

When you download a report for any service, it includes the LLM risk attributes. The supported file formats for downloading the reports are CSV, XLS, and PDF (Business Report).
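If you download the CSV report, you can post-process it, for example to list the services that have any High LLM risk attribute. The following Python sketch uses only the standard `csv` module. The column names (`Service Name` and `LLM Model - <attribute>`) and the value text are assumptions based on the filter labels in the Cloud Registry; check them against the header row of your actual export before relying on the output.

```python
import csv
import io
from typing import Dict, List

# Assumed column names; adjust to match the header row of your export.
NAME_COLUMN = "Service Name"
LLM_COLUMNS = [f"LLM Model - {attr}" for attr in
               ("Jailbreak", "Toxicity", "Bias", "Malware",
                "NIST", "OWASP", "CBRN", "Harmful")]


def high_risk_services(csv_text: str) -> Dict[str, List[str]]:
    """Map each service name to the LLM attribute columns whose value is High.

    Values such as "High" or "High Risk" count as high; NPK, NA, and
    columns missing from the export are ignored.
    """
    result: Dict[str, List[str]] = {}
    for row in csv.DictReader(io.StringIO(csv_text)):
        high = [col for col in LLM_COLUMNS
                if (row.get(col) or "").strip().lower().startswith("high")]
        if high:
            result[row[NAME_COLUMN]] = high
    return result


sample = (
    "Service Name,LLM Model - Jailbreak,LLM Model - Bias\n"
    "ExampleAI,Low,High\n"
    "ExampleDocs,NPK,NPK\n"
)
print(high_risk_services(sample))  # {'ExampleAI': ['LLM Model - Bias']}
```

To process a downloaded file, open it with `open(path, newline="", encoding="utf-8")` and pass the result of `read()` to `high_risk_services`.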