Best Practice - Configuring Central Management Node Groups

In a cluster where multiple Secure Web Gateway appliances run as nodes, these nodes are assigned to node groups to enable different methods of communication between them. Node groups can also include nodes running in different physical locations.

To ensure the efficient use of node groups in a cluster, the following must apply:

- Appropriate routes are configured in your network to allow communication between nodes.

If nodes in different locations are protected by firewalls, the firewalls must allow traffic on the port that is configured on each node for communication with the other nodes. The default port is 12346.

- Time is synchronized. The nodes rely on synchronized time to determine which node has the most up-to-date configuration.

We highly recommend that you configure the use of an NTP server on each node for automatic synchronization. This is done as part of configuring the Date and Time settings of the Configuration top-level menu.

If you are not using an NTP server for your network, you can configure the default server that is provided by Skyhigh Security at ntp.webwasher.com.

- The same version and build of the Secure Web Gateway appliance software is running on all appliances that are configured as nodes.
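The firewall requirement above can be spot-checked from any host that should be able to reach the nodes. The following is a minimal sketch, not a Skyhigh Security tool: it assumes the Central Management port accepts plain TCP connections, and the function names `port_reachable` and `unreachable_nodes` are made up for this illustration. It only shows that something is listening on the port, not that node communication itself works.

```python
import socket

DEFAULT_CM_PORT = 12346  # default port named above; change it if your nodes use another one


def port_reachable(host, port=DEFAULT_CM_PORT, timeout=3.0):
    """Return True if a TCP connection to host:port can be opened."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and name resolution failures.
        return False


def unreachable_nodes(hosts, port=DEFAULT_CM_PORT, timeout=3.0):
    """Return the entries of `hosts` that do not accept a connection on `port`."""
    return [host for host in hosts if not port_reachable(host, port, timeout)]
```

Calling `unreachable_nodes` with the host names or IP addresses of your own nodes returns the ones that a firewall or routing problem is likely to be blocking.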

Small Sample Configuration

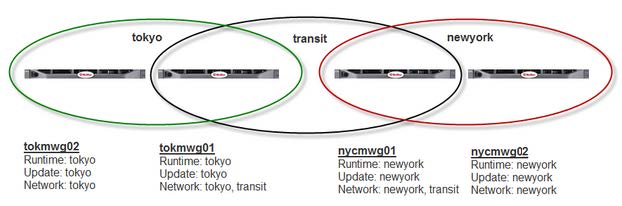

In this sample configuration, there are two different locations (Tokyo and New York) with two nodes each. In both locations, the nodes are assigned to their own runtime, update, and network groups. The group names are tokyo and newyork, respectively, for all types of groups.

One node in each location is also assigned to the transit network group, which is the same for both locations.

The diagram above shows this configuration.

The following is achieved in this configuration:

- Policy changes that an administrator configures on any node are distributed to all other nodes, due to the existence of a transit group node in each location. This ensures the web security policy remains the same on all nodes.

The changes are transferred, for example, from the non-transit node in New York to the transit node in New York because both are in one network group. They are then transferred from this transit node to the transit node in Tokyo, again because both are in one network group, the transit group.

Finally, the changes are transferred from the Tokyo transit node to the other node in this location.

- Updates of anti-malware and URL filtering information for the respective modules (engines) of Secure Web Gateway are only distributed between nodes in Tokyo and between nodes in New York.

This allows you to account for differences in the network structure of locations, which is advisable regarding the download of potentially large update files.

Nodes in one location with, for example, fast connections and LAN links can share these updates, while they are not distributed between these nodes and those in other locations with, for example, slower connections and WAN links.

We generally recommend that you include only nodes of one location in the same update group.

- Runtime data, for example, the quota time consumed by users, is only distributed between nodes in Tokyo and between nodes in New York.

This makes sense because users in one location will probably only be directed to the local nodes when requesting web access. A node in New York therefore does not need to be informed about, for example, the remaining quota time of a user in Tokyo.

If the nodes in one location are assigned to different user groups with regard to their web access, you can also configure these nodes in different runtime groups to avoid an information overhead on any node.
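The distribution behavior described for this sample can be modeled in a few lines. The sketch below is only an illustration of the reasoning, not product code: the node names (`tokyo-1` and so on) are hypothetical, and the model assumes that a policy change travels between any two nodes that share at least one network group, while updates and runtime data stay within the node's update or runtime group.

```python
from collections import deque

# Hypothetical node names; the group names follow the sample (tokyo, newyork, transit).
NODES = {
    "tokyo-1": {"network": {"tokyo", "transit"}, "update": "tokyo", "runtime": "tokyo"},
    "tokyo-2": {"network": {"tokyo"}, "update": "tokyo", "runtime": "tokyo"},
    "newyork-1": {"network": {"newyork", "transit"}, "update": "newyork", "runtime": "newyork"},
    "newyork-2": {"network": {"newyork"}, "update": "newyork", "runtime": "newyork"},
}


def policy_reach(start, nodes=NODES):
    """Nodes that receive a policy change made on `start`, hopping between
    nodes that share at least one network group (breadth-first search)."""
    seen = {start}
    queue = deque([start])
    while queue:
        current = queue.popleft()
        for other, cfg in nodes.items():
            if other not in seen and nodes[current]["network"] & cfg["network"]:
                seen.add(other)
                queue.append(other)
    return seen


def peers(node, kind, nodes=NODES):
    """Nodes in the same update or runtime group (`kind`) as `node`, including itself."""
    return {name for name, cfg in nodes.items() if cfg[kind] == nodes[node][kind]}


print(sorted(policy_reach("newyork-2")))     # all four nodes
print(sorted(peers("newyork-2", "update")))  # ['newyork-1', 'newyork-2']
```

Removing `"transit"` from either transit node's network groups makes `policy_reach` stop at the location boundary, which is why one transit member per location is needed.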

Larger Sample Configuration

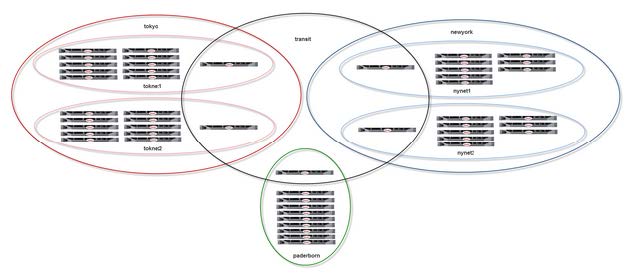

No more than 10 nodes should be configured for a network group together with a transit node. This means that in larger locations, you need to configure more than one node for the transit network group.

In the following sample configuration, there are 22 nodes in one location (Tokyo), which are split into two network groups (toknet1 and toknet2), both of which include one node that is also a member of the transit group.

The 18 nodes in the second location (New York) are configured in the same way, whereas the 9 nodes in the third location (Paderborn) are all in one network group with one node that is also in the transit group.

The diagram above shows this configuration.

Regarding runtime and update node groups, there is one of each type for every location.

Policy changes, updates of anti-malware and URL filtering information, as well as sharing of runtime data are handled in the same way as for the smaller sample configuration.
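The group counts in this sample follow from the 10-node limit. The short calculation below is a sketch under one assumption that the text does not state explicitly: the limit is read as one transit node plus at most 10 other nodes per network group, which is the reading that matches the 22 Tokyo nodes being split into two groups. The function name `network_groups_needed` is made up for this illustration.

```python
import math

MAX_NODES_PER_TRANSIT = 10  # limit stated above


def network_groups_needed(node_count, limit=MAX_NODES_PER_TRANSIT):
    """Minimum number of network groups for a location, assuming each group
    holds one transit node plus at most `limit` other nodes."""
    if node_count < 1:
        raise ValueError("node_count must be at least 1")
    return math.ceil(node_count / (limit + 1))


for location, count in {"Tokyo": 22, "New York": 18, "Paderborn": 9}.items():
    print(location, network_groups_needed(count))
# Tokyo 2
# New York 2
# Paderborn 1
```

Each network group contributes one transit node, so the result is also the number of transit group members to plan for in that location.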

Alternative Configuration of Nodes with Transit Group Functions

You can configure nodes that perform the functions of nodes in a transit group without formally creating a transit group as a group of its own.

If you have, for example, two groups of nodes, each of which is configured as a network group, you can configure one of the nodes in each group to be a member not only of its own, but also of the other network group.

The nodes that are configured in this way will perform transit group functions. For example, they will distribute policy changes that the administrator applies on one node to all other nodes in the two groups.

Best practice for smaller node groups is to configure one node as a member of its own group and of the other group or groups. For larger node groups, configure more than one node with multiple memberships for every node group.
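The effect of such multiple memberships can be checked on paper before you change any node. The following sketch uses hypothetical group and node names and the same simplifying assumption as the description above: two nodes can exchange policy changes when they share a network group. It adds one node of each group to every other group and then tests whether all nodes are connected.

```python
def add_cross_membership(groups):
    """Return a copy of `groups` in which one node of each network group
    (the first by name) is also made a member of every other group."""
    bridged = {name: set(members) for name, members in groups.items()}
    for name, members in groups.items():
        bridge = sorted(members)[0]
        for other in bridged:
            if other != name:
                bridged[other].add(bridge)
    return bridged


def is_connected(groups):
    """True if every node can reach every other node through shared groups."""
    all_nodes = set().union(*groups.values())
    if not all_nodes:
        return True
    reached = set()
    frontier = {next(iter(all_nodes))}
    while frontier:
        reached |= frontier
        # Everything in a group that already contains a reached node is reachable next.
        frontier = {node for members in groups.values()
                    if members & reached for node in members} - reached
    return reached == all_nodes


groups = {"group_a": {"a1", "a2", "a3"}, "group_b": {"b1", "b2"}}
print(is_connected(groups))                        # False
print(is_connected(add_cross_membership(groups)))  # True
```

For larger groups, the same check can be repeated with additional multi-membership nodes to confirm that the groups stay connected if one of those nodes is unavailable.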