Network Down on Node in Central Management

Title: Network Down on Node in Central Management

Category / Product: Web Gateway

Environment: Central Management

Central Management allows you to administer multiple Web Gateway appliances in your network as nodes in a common configuration. A configuration of multiple appliances administered through Central Management is also referred to as a cluster.

When administering a Central Management cluster, you mainly deal with:

• Nodes: Appliances run as nodes that are connected to each other and send and receive data to perform updates, backups, downloads, and other jobs.

• Node groups: Nodes are assigned to different types of node groups that allow different ways of transferring data.

• Scheduled jobs: Data can be transferred according to time schedules that you configure.

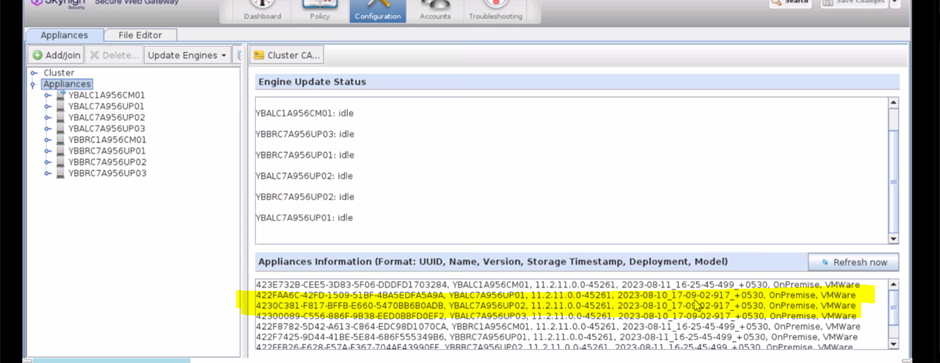

In the diagram above, the SWG cluster has two network groups: Network Alpha and Network Bravo.

Network Alpha has three nodes and one cluster management node:

YBALC1A956CM01

YBALC7A956UP01

YBALC7A956UP02

YBALC7A956UP03

Network Bravo has three nodes and one cluster management node:

YBBRC1A956CM01

YBBRC7A956UP01

YBBRC7A956UP02

YBBRC7A956UP03

Issue: Network drop on node YBALC7A956UP01 (Alpha group). This impacts production traffic and causes instability in the network.

In the figure below, traffic drops to zero every 15 minutes and then returns to normal.

Causes:

1. Cluster nodes that are not in sync cause this problem. In a synchronized cluster, all nodes show the same Current Storage Timestamp.

In the figure below, three nodes are not in sync with the rest of the nodes: their timestamps differ.

2. Secure Web Gateway nodes experience an intermittent network issue while one node is transmitting shared data to another node (for example, the TCP handshake fails or the node is not reachable).
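One way to test the second cause is to attempt a TCP handshake to each peer node. The Python sketch below is an illustration, not a Web Gateway tool: `tcp_reachable` is a hypothetical helper, and the port is a required parameter because this article does not state which port Central Management uses, so pass the Central Management port configured in your cluster.

```python
import socket


def tcp_reachable(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP handshake with host:port completes within `timeout` seconds.

    `host` and `port` are placeholders: use a peer node's name or address and
    the Central Management port configured in your cluster.
    """
    try:
        # create_connection resolves the host and completes the TCP three-way handshake.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Refused connections, timeouts and DNS failures are all OSError subclasses.
        return False
```

Running this repeatedly (for example `tcp_reachable("YBALC7A956UP02", port)`) from a host on the same network path as YBALC7A956UP01, and logging failures with timestamps, can show whether handshake failures line up with the traffic drops. A successful handshake only proves the port is open; it does not prove that the Central Management exchange itself succeeds.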

Troubleshooting and checks to be carried out:

Verify the synchronization of nodes

The user interface displays, among other general information, a timestamp for each node in a Central Management Configuration, which allows you to verify whether all nodes are synchronized.

Task

1 Select Configuration | Appliances.

2 On the appliances tree, select Appliances (Cluster). Status and general information about the configuration and its nodes appear on the settings pane. Under Appliances Information, a list is shown that contains a line with information for every node. The timestamp is the last item in each line.

3 Compare the timestamps for all nodes. If they are the same for all nodes, the Central Management configuration is synchronized.
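With many nodes, the comparison in step 3 can be scripted. The sketch below assumes you have copied each node's timestamp from Appliances Information into a dictionary; timestamps are compared as opaque strings exactly as displayed, so their format does not matter. Treating the most common timestamp as the reference is an assumption of this sketch, and the sample values are hypothetical.

```python
from collections import Counter
from typing import Dict, List, Tuple


def check_sync(timestamps: Dict[str, str]) -> Tuple[bool, List[str]]:
    """Return (in_sync, out_of_sync_nodes) for a {node: timestamp} mapping.

    Timestamps are compared as the exact strings shown in the GUI.
    The most common timestamp is treated as the reference (an assumption).
    """
    if not timestamps:
        return True, []
    reference, _ = Counter(timestamps.values()).most_common(1)[0]
    stale = sorted(node for node, ts in timestamps.items() if ts != reference)
    return not stale, stale


# Hypothetical values, for illustration only.
example = {
    "YBALC1A956CM01": "2023-08-11 16:40:02",
    "YBALC7A956UP01": "2023-08-11 16:10:47",
    "YBALC7A956UP02": "2023-08-11 16:40:02",
    "YBALC7A956UP03": "2023-08-11 16:40:02",
}
print(check_sync(example))  # (False, ['YBALC7A956UP01'])
```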

You can check your NTP settings in the GUI under Configuration > Date & Time. If the system time is too far out of sync (more than about 1000 seconds), NTP does not synchronize it automatically.
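The ~1000-second figure matches ntpd's default panic threshold: when the offset is larger than that, ntpd refuses to correct the clock on its own, which is why a forced sync is needed. The hypothetical helper below makes that decision explicit; it assumes the offset is read from the `offset` column of `ntpq -p`, which ntpq reports in milliseconds.

```python
# ntpd's default panic threshold in seconds; larger offsets are not corrected automatically.
NTPD_PANIC_THRESHOLD_S = 1000.0


def needs_forced_sync(offset_ms: float, threshold_s: float = NTPD_PANIC_THRESHOLD_S) -> bool:
    """Return True if the clock offset is beyond what ntpd will correct by itself.

    offset_ms: offset in milliseconds, for example the 'offset' column of `ntpq -p`.
    """
    return abs(offset_ms) / 1000.0 > threshold_s
```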

Alternatively, check the time status from an SSH console with this command:

timedatectl
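To read the result in a script rather than by eye, the synchronization line of `timedatectl` can be parsed. Depending on the systemd version, that line is labelled `System clock synchronized` (newer releases) or `NTP synchronized` (older releases); the sketch below accepts either. It is a minimal parser under that assumption, not a tool shipped with Web Gateway.

```python
from typing import Optional

# Label used by newer systemd releases, then the label used by older releases.
SYNC_LABELS = ("System clock synchronized", "NTP synchronized")


def clock_synchronized(output: str) -> Optional[bool]:
    """Parse `timedatectl` output; return True/False, or None if no sync line is present."""
    for line in output.splitlines():
        # Split on the first colon only: values such as "16:52:55" contain colons too.
        label, sep, value = line.partition(":")
        if sep and label.strip() in SYNC_LABELS:
            return value.strip().lower() == "yes"
    return None
```

If Python is available on the host, the text can come from `subprocess.run(["timedatectl"], capture_output=True, text=True).stdout`.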

If the clock is not in sync, force a sync (MWG 7.3 and later):

service ntpd stop
service ntpdate start
service ntpd start

Examples:

Common error causes: time out of sync, version mismatch, and network problems.

Here is an example of an error caused by time out of sync:

[2023-08-11 16:52:55.938 +05:30] [Coordinator] [MessageWillRespondWithError] Will respond to message <co_distribute_init_subscription> with error: (405 - 'time difference too high') - "time difference too high: 2023-08-11T16:52:00.732+05:30 / 2023-08-11T16:52:55.937+05:30".
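The two ISO 8601 timestamps inside the error message show how far apart the clocks were. The sketch below extracts them with a regular expression and returns the absolute difference in seconds; the log line does not say which timestamp belongs to which node, so only the magnitude is used. The pattern is written for the message format shown above and may need adjusting for other log formats.

```python
import re
from datetime import datetime
from typing import Optional

# ISO 8601 timestamp with optional milliseconds/microseconds and a UTC offset,
# as in "2023-08-11T16:52:00.732+05:30".
ISO_TS = r"\d{4}-\d{2}-\d{2}T\d{2}:\d{2}:\d{2}(?:\.\d{3}(?:\d{3})?)?[+-]\d{2}:\d{2}"
SKEW_RE = re.compile(rf"time difference too high: ({ISO_TS}) / ({ISO_TS})")


def skew_from_log(line: str) -> Optional[float]:
    """Return the absolute clock difference in seconds, or None if the line has no such error."""
    match = SKEW_RE.search(line)
    if match is None:
        return None
    first, second = (datetime.fromisoformat(ts) for ts in match.groups())
    # Which timestamp belongs to which node is not stated, so use the magnitude only.
    return abs((second - first).total_seconds())
```

For the example line above, `skew_from_log` returns about 55.2 seconds (16:52:55.937 minus 16:52:00.732).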

Things to check in the cluster settings on all cluster members: distribution timeout, distribution timeout multiplier, allowed time difference, and the version match check.
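If you record these values from each cluster member, the comparison can be scripted. The setting keys below are hypothetical stand-ins for the GUI labels, and the sample values in any input you build are your own; the function reports every setting whose value is not identical on all members.

```python
from typing import Any, Dict

# Hypothetical keys standing in for the cluster settings named above.
SETTINGS_TO_CHECK = (
    "distribution_timeout",
    "distribution_timeout_multiplier",
    "allowed_time_difference",
    "check_version_match",
)


def mismatched_settings(nodes: Dict[str, Dict[str, Any]]) -> Dict[str, Dict[str, Any]]:
    """Return {setting: {node: value}} for each setting that is not identical on all nodes.

    A node that lacks a setting the others have is recorded as None and reported too.
    Values are assumed to be simple hashable types (numbers, strings, booleans).
    """
    mismatches: Dict[str, Dict[str, Any]] = {}
    for key in SETTINGS_TO_CHECK:
        values = {node: cfg.get(key) for node, cfg in nodes.items()}
        if len(set(values.values())) > 1:
            mismatches[key] = values
    return mismatches
```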

In our example, the other parameters were correct, so we changed the allowed time difference to 300 seconds so that configuration changes could be accepted. After the change, the nodes were synchronized and received updates, and no further network fluctuations were observed on the reported node.

![image 2](clipboard_ec4d4b0422ce1f7d840e07b40aedb935b.png)

Considerations

- Once a cluster is established, you can only access one GUI at a time

- All changes made by that GUI are synced to all other cluster members

- The system time is critical in a CM cluster.

- If the times get out of sync (by more than 2 minutes), CM communication fails and errors are reported

- All appliances should run the same version and build. Mixing versions in a Central Management cluster is never recommended
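The version rule in the last item can be checked the same way. This sketch groups nodes by (version, build) so that a mixed-version cluster shows up as more than one group; the node names come from the diagram above, but any version and build strings you feed it are your own, and the helper names are hypothetical.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

VersionBuild = Tuple[str, str]


def group_by_version(nodes: Dict[str, VersionBuild]) -> Dict[VersionBuild, List[str]]:
    """Group node names by their (version, build) pair."""
    groups: Dict[VersionBuild, List[str]] = defaultdict(list)
    for node, version_build in sorted(nodes.items()):
        groups[version_build].append(node)
    return dict(groups)


def versions_consistent(nodes: Dict[str, VersionBuild]) -> bool:
    """True when every node runs the same version and build (an empty cluster counts as consistent)."""
    return len(group_by_version(nodes)) <= 1
```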